How do you advance machine learning? Teach machines Beethoven and Bach

MusicNet is a new dataset of classical music that aims to teach machines about musical composition, advancing their learning, understanding and compositional capabilities.

MusicNet is a large dataset created by researchers at the University of Washington (UW) to teach machines the structures, melodies and nuances of classical music. This isn't the first time we've taught music to computers, but the hope is that MusicNet will advance the technology in new ways through more intricate learning.

MusicNet's dataset of classical music is publicly available and comes with curated, fine-grained annotations that allow machines to take a deeper look at how the music is composed and played. The researchers hope MusicNet will advance areas of machine learning such as note prediction, automated music transcription and, most exciting in my opinion, recommendations based on the audio itself rather than on associated tags.

Sham Kakade, UW associate professor of computer science and engineering and of statistics, said: "At a high level, we're interested in what makes music appealing to the ears, how we can better understand composition, or the essence of what makes Bach sound like Bach. It can also help enable practical applications that remain challenging, like automatic transcription of a live performance into a written score."

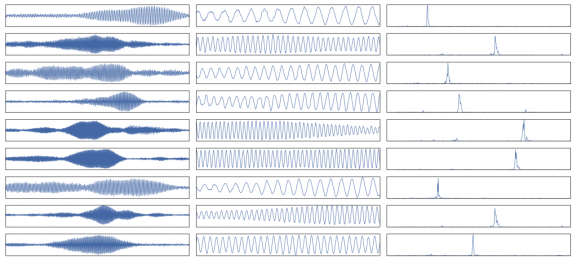

MusicNet's 330 freely licensed classical recordings feature over 1 million annotated labels that indicate the timing of each note precisely, down to the millisecond. The labels also tell machines which instrument plays each note and where each note falls within the structure of the piece.

All of the labels have been verified by trained musicians to check that they are accurate and give the machines the most precise information for learning. The dataset plays a role similar to that of ImageNet, a large-scale image database that helped drive the development of today's algorithms for detecting faces, objects and more, the same kind of technology that lets self-driving cars recognize the objects around them.

Zaid Harchaoui, assistant professor of statistics at UW, said: "The music research community has been working for decades on hand-crafting sophisticated audio features for music analysis. We built MusicNet to give researchers a large labelled dataset to automatically learn more expressive audio features, which show potential to radically change the state-of-the-art for a wide range of music analysis tasks."

The researchers used a technique known as Dynamic Time Warping, which matches similar audio played at different speeds, allowing machines to delve deeper into the music than any musician could. John Thickstun, lead author on the project, said: "You need to be able to say from 3 seconds and 50 milliseconds to 78 milliseconds, this instrument is playing an A. But that's impractical or impossible for even an expert musician to track with that degree of accuracy.

“I’m really interested in the artistic opportunities. Any composer who crafts their art with the assistance of a computer – which includes many modern musicians – could use these tools. If the machine has a higher understanding of what they’re trying to do, that just gives the artist more power.”
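To give a feel for how Dynamic Time Warping matches the same material played at different speeds, here is a minimal textbook sketch in Python. It is not MusicNet's actual pipeline, which the article doesn't detail: it assumes simple 1-D sequences (hypothetical MIDI pitch numbers rather than real audio features) and uses the absolute difference as the local cost. The function name `dtw` and the example data are illustrative only.

```python
import math

def dtw(a, b):
    """Classic DTW: return (total cost, alignment path) for sequences a and b.

    Local cost is the absolute difference, a simplifying assumption
    suitable for toy 1-D sequences, not real audio features.
    """
    n, m = len(a), len(b)
    # D[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i][j] = cost + min(D[i - 1][j - 1],  # both sequences advance
                                 D[i - 1][j],      # a advances, b holds
                                 D[i][j - 1])      # b advances, a holds
    # Backtrack from the end, following the cheapest predecessor each time
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = {
            (i - 1, j - 1): D[i - 1][j - 1],
            (i - 1, j): D[i - 1][j],
            (i, j - 1): D[i][j - 1],
        }
        i, j = min(steps, key=steps.get)
    path.reverse()
    return D[n][m], path

# Hypothetical example: the same four notes, performed more slowly
score = [60, 62, 64, 65]
performance = [60, 60, 62, 62, 62, 64, 65, 65]
cost, path = dtw(score, performance)
print(cost)  # 0.0
```

Because the slower performance contains exactly the score's notes, just held longer, DTW finds a zero-cost alignment in which every performance step is paired with the matching score note. That pairing is what lets a note's timing in a recording be read off from a written score.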

You can find out more and make use of MusicNet's classical music dataset yourself by visiting its website: homes.cs.washington.edu/~thickstn/musicnet.html